¶ Characteristics

- EFS is similar to network-attached storage (NAS).

- File-based storage designed for workloads with heavy reads and writes.

- S3, by contrast, suits static files that are written once and read many times. It is also better suited to larger files, such as video and images.

- EFS can be mounted on multiple EC2 instances at once, across multiple AZs.

- An EBS volume, by contrast, is normally attached to a single EC2 instance at a time (io1/io2 Multi-Attach within a single AZ is the exception).

- Optimized for low latency

- High level of throughput (MB/s)

- Can scale to petabytes (PB) of storage.

- Accessed through mount targets, which can be created in multiple AZs.

- No file servers to manage (AWS handles this for you).

- Data is replicated across multiple AZs within a Region (for Standard file systems; see One Zone below).

- Compatible with Linux-based AMIs (not Windows).

- Not available in all Regions.

- Encryption at rest can be enabled with KMS.

- POSIX-compliant file system.

¶ Scaling

- Thousands of concurrent NFS clients and 10 GB/s+ of throughput.

- Grows automatically into a petabyte-scale network file system.

¶ Storage Class

¶ Standard

- Charged for the amount of storage you use per month.

¶ Infrequent Access (EFS-IA)

- Lower storage price

- Higher first-byte latency for reads and writes

- Charged for the storage used plus a data-access charge for each read and write to the storage class.

Both storage classes are available in all regions where EFS is supported.

Both provide the same level of availability and durability.
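To make the pricing difference concrete, here is a minimal Python sketch of the two billing models described above. The prices are hypothetical placeholders, not real EFS rates (check the EFS pricing page for your Region), and the model ignores everything except storage and IA data access.

```python
# Hypothetical prices for illustration only; not actual EFS rates.
STANDARD_PRICE_PER_GB_MONTH = 0.30
IA_PRICE_PER_GB_MONTH = 0.025
IA_ACCESS_PRICE_PER_GB = 0.01


def standard_monthly_cost(stored_gb: float) -> float:
    """Standard: pay only for the storage used."""
    return stored_gb * STANDARD_PRICE_PER_GB_MONTH


def ia_monthly_cost(stored_gb: float, accessed_gb: float) -> float:
    """IA: pay for the storage used plus a charge for data read or written."""
    return stored_gb * IA_PRICE_PER_GB_MONTH + accessed_gb * IA_ACCESS_PRICE_PER_GB


def cheaper_class(stored_gb: float, accessed_gb: float) -> str:
    """Return whichever class is cheaper for one month under these assumed prices."""
    if ia_monthly_cost(stored_gb, accessed_gb) < standard_monthly_cost(stored_gb):
        return "IA"
    return "Standard"


if __name__ == "__main__":
    # Rarely read data favours IA; heavily read data favours Standard.
    print(cheaper_class(100, 10), cheaper_class(100, 5000))
```

The takeaway is the shape of the trade-off: IA wins while access volume stays low, and the per-access charge eventually outweighs the cheaper storage.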

¶ Performance Offerings

¶ General Purpose

- Default performance mode, suitable for most use cases.

- Typical uses: home directories and general file-sharing environments.

- Offers all-round performance with low-latency file operations.

- Maximum of 7,000 file system operations per second.

¶ Max I/O

- Designed for highly parallel workloads (for example, big data and media processing), at the cost of higher latency.

- Use it if you need to connect a large number of EC2 instances.

- Use it if you need to exceed 7,000 operations per second.

- Offers virtually unlimited throughput and IOPS.

- File operation latency is higher than with General Purpose.

- Throughput mode is chosen separately from performance mode:

- Bursting: for example, 1 TiB of storage gives a 50 MiB/s baseline with bursts up to 100 MiB/s.

- Provisioned: set throughput regardless of storage size, for example 1 GiB/s for a file system storing 1 TiB.

¶ Choosing

The best way to determine which performance mode you need is to run tests alongside your application. If your application sits comfortably within the limit of 7,000 operations per second, General Purpose is the better fit, with the added benefit of lower latency. However, if testing shows that your workload may reach or exceed 7,000 operations per second, select Max I/O.

- EFS provides the CloudWatch metric PercentIOLimit, which shows the file system's operations per second as a percentage of the 7,000 limit.
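The decision rule above can be sketched in Python. This assumes you already have a peak operations-per-second figure from your own load tests; the 80% headroom threshold is a hypothetical safety margin chosen for illustration, not an AWS recommendation.

```python
GENERAL_PURPOSE_OPS_LIMIT = 7_000  # limit figure used in these notes


def percent_io_limit(ops_per_second: float) -> float:
    """Express an observed operation rate as a percentage of the General Purpose limit."""
    return 100.0 * ops_per_second / GENERAL_PURPOSE_OPS_LIMIT


def recommended_mode(peak_ops_per_second: float, headroom_percent: float = 80.0) -> str:
    """Suggest a performance mode from load-test results.

    headroom_percent is a hypothetical threshold, not an AWS setting: if the
    measured peak gets that close to the limit, Max I/O is suggested.
    """
    if percent_io_limit(peak_ops_per_second) >= headroom_percent:
        return "Max I/O"
    return "General Purpose"


if __name__ == "__main__":
    for peak in (3_500, 6_000, 7_500):
        print(peak, round(percent_io_limit(peak), 1), recommended_mode(peak))
```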

¶ Throughput modes

- Throughput is measured in mebibytes per second (MiB/s).

¶ Bursting Throughput

- Default mode.

- The amount of throughput scales as your file system grows: the more you store, the more throughput is available to you.

- Bursts up to 100 MiB/s per TiB of storage used within the file system.

- How long the file system can burst depends on its size and on burst credits. Credits accumulate during periods of low activity, when the file system operates below its baseline throughput of 50 MiB/s per TiB of storage used, and the credit balance determines how long EFS can burst.

- Every file system can reach its baseline throughput 100% of the time.

- Once it has accumulated credits, your file system can burst above its baseline.

- The credit balance dictates how long bursting can be sustained; you can track it with the CloudWatch metric BurstCreditBalance.
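A simplified model of the Bursting arithmetic, assuming the rates given above (50 MiB/s baseline and 100 MiB/s burst per TiB) and assuming that small file systems can still burst to 100 MiB/s. Real credit accounting has more detail, so treat the results as rough estimates.

```python
BASELINE_MIBPS_PER_TIB = 50.0
BURST_MIBPS_PER_TIB = 100.0
MIN_BURST_MIBPS = 100.0  # assumption: small file systems can still burst to 100 MiB/s
GIB_PER_TIB = 1024.0


def baseline_mibps(size_gib: float) -> float:
    """Throughput available 100% of the time."""
    return size_gib / GIB_PER_TIB * BASELINE_MIBPS_PER_TIB


def burst_mibps(size_gib: float) -> float:
    """Throughput available while burst credits remain."""
    return max(MIN_BURST_MIBPS, size_gib / GIB_PER_TIB * BURST_MIBPS_PER_TIB)


def credits_earned_mib(size_gib: float, actual_mibps: float, seconds: float) -> float:
    """Credits gained while running below baseline (none when at or above it)."""
    return max(0.0, baseline_mibps(size_gib) - actual_mibps) * seconds


def burst_seconds(size_gib: float, credit_balance_mib: float) -> float:
    """How long a full-rate burst lasts: the balance drains at (burst - baseline)."""
    drain = burst_mibps(size_gib) - baseline_mibps(size_gib)
    return credit_balance_mib / drain


if __name__ == "__main__":
    # 1 TiB file system: 50 MiB/s baseline, 100 MiB/s burst.
    print(baseline_mibps(1024), burst_mibps(1024), burst_seconds(1024, 180_000))
```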

¶ Provisioned Throughput

- Lets you set throughput independently of file system size, above the allowance that Bursting mode would give a file system of that size. If your file system is relatively small but your use case requires a high throughput rate, Bursting Throughput may not be able to serve your requests quickly enough; in that case, use Provisioned Throughput. This option incurs additional charges: you pay for the throughput you provision above what the default Bursting mode would provide.
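The choice between Bursting and Provisioned can be sketched as a comparison between the throughput your workload needs continuously and the baseline Bursting would give. The billing helper assumes you pay only for provisioned throughput above the Bursting baseline; that is a simplified reading of the pricing, so confirm it against current EFS pricing.

```python
def bursting_limits(size_gib: float) -> tuple[float, float]:
    """(baseline, burst) in MiB/s, using 50 and 100 MiB/s per TiB (100 MiB/s burst floor)."""
    baseline = size_gib / 1024.0 * 50.0
    burst = max(100.0, size_gib / 1024.0 * 100.0)
    return baseline, burst


def needs_provisioned(size_gib: float, required_mibps: float) -> bool:
    """True if the workload needs more continuous throughput than the baseline.

    Bursting only guarantees the baseline; anything above it lasts only
    while burst credits remain.
    """
    baseline, _ = bursting_limits(size_gib)
    return required_mibps > baseline


def billable_provisioned_mibps(size_gib: float, provisioned_mibps: float) -> float:
    """Assumed billing model: only throughput above the Bursting baseline is charged."""
    baseline, _ = bursting_limits(size_gib)
    return max(0.0, provisioned_mibps - baseline)


if __name__ == "__main__":
    # A small (100 GiB) file system that needs a steady 50 MiB/s.
    print(needs_provisioned(100, 50))
    # 1 GiB/s provisioned on a 1 TiB file system.
    print(billable_provisioned_mibps(1024, 1024))
```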

¶ Storage Tiers

- Standard and EFS-IA (Infrequent Access)

¶ EFS lifecycle management

- When enabled, EFS automatically moves data between the Standard storage class and the IA storage class.

- A file is moved when it has not been read or written for a configurable number of days; options include 14, 30, 60, or 90 days.

- Once that period has been met, EFS moves the file to the IA storage class to save on cost.

- When the file is accessed again, its timer is reset and, if the lifecycle policy is set to transition files out of IA on first access, it is moved back to the Standard storage class.

- If it is then not accessed for another full period, it is moved back to IA.

- Every time a file is accessed, its lifecycle management timer is reset.

- The only exceptions are files smaller than 128 KiB and file metadata, which always remain in the Standard storage class.

- If your EFS file system was created after February 13, 2019, lifecycle management can be switched on or off.
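The lifecycle rules above can be modelled in a few lines of Python. This is a hypothetical model, not how EFS is implemented: FileState and storage_class are illustrative names. The lifecycle_policies helper builds a LifecyclePolicies list in the shape accepted by boto3's put_lifecycle_configuration call (one transition per policy object); the sketch itself does not call AWS.

```python
from dataclasses import dataclass

MIN_IA_SIZE_BYTES = 128 * 1024  # files below 128 KiB always stay in Standard
ALLOWED_POLICY_DAYS = (14, 30, 60, 90)  # options listed in these notes


@dataclass
class FileState:
    """Hypothetical view of a file for this model."""
    size_bytes: int
    days_since_last_access: int  # an access resets this to 0


def storage_class(f: FileState, policy_days: int) -> str:
    """Where the file would live under the rules described above."""
    if policy_days not in ALLOWED_POLICY_DAYS:
        raise ValueError(f"unsupported policy period: {policy_days}")
    if f.size_bytes < MIN_IA_SIZE_BYTES:
        return "Standard"
    if f.days_since_last_access >= policy_days:
        return "IA"
    return "Standard"


def lifecycle_policies(policy_days: int) -> list[dict[str, str]]:
    """LifecyclePolicies argument: move to IA after N days, back on first access."""
    return [
        {"TransitionToIA": f"AFTER_{policy_days}_DAYS"},
        {"TransitionToPrimaryStorageClass": "AFTER_1_ACCESS"},
    ]


if __name__ == "__main__":
    big = FileState(size_bytes=10 * 1024 * 1024, days_since_last_access=45)
    print(storage_class(big, 30), lifecycle_policies(30))
```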

¶ Availability and Durability

¶ Standard

- great for prod

- multi-AZ

¶ One Zone

- One AZ

- Great for dev

- Backup enabled by default

- Compatible with IA (EFS One Zone-IA)

- 90% cost savings over Standard.

¶ Creating an EFS

¶ Prerequisites

- You must have created and configured your EFS file system and its mount targets.

- You must have an EC2 instance running with the EFS mount helper installed.

- The instance must be in the same VPC and configured to use the Amazon DNS servers, with DNS hostnames enabled.

- A security group must allow NFS traffic (TCP port 2049) between your Linux instance and the EFS mount targets.

- You must also be able to connect to your Linux instance.
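As a sketch of how the prerequisites come together, the helpers below build mount commands for an instance. The file system ID fs-12345678, the Region, and the /mnt/efs mount point are hypothetical. The first command uses the EFS mount helper with the tls option; the second mounts over plain NFSv4.1 using the file system's DNS name, which the VPC's DNS settings must be able to resolve.

```python
import shlex

# Commonly recommended NFS client options for EFS.
NFS_OPTIONS = "nfsvers=4.1,rsize=1048576,wsize=1048576,hard,timeo=600,retrans=2,noresvport"


def efs_dns_name(file_system_id: str, region: str) -> str:
    """DNS name of the form file-system-id.efs.region.amazonaws.com."""
    return f"{file_system_id}.efs.{region}.amazonaws.com"


def mount_helper_command(file_system_id: str, mount_point: str, tls: bool = True) -> list[str]:
    """Mount with the EFS mount helper; tls encrypts data in transit."""
    options = ["-o", "tls"] if tls else []
    return ["sudo", "mount", "-t", "efs", *options, f"{file_system_id}:/", mount_point]


def nfs_mount_command(file_system_id: str, region: str, mount_point: str) -> list[str]:
    """Mount with the standard NFS client instead of the mount helper."""
    target = f"{efs_dns_name(file_system_id, region)}:/"
    return ["sudo", "mount", "-t", "nfs4", "-o", NFS_OPTIONS, target, mount_point]


if __name__ == "__main__":
    print(shlex.join(mount_helper_command("fs-12345678", "/mnt/efs")))
    print(shlex.join(nfs_mount_command("fs-12345678", "us-east-1", "/mnt/efs")))
```

Building the commands as argument lists keeps them safe to pass to subprocess.run without shell quoting issues.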

¶ EFS Security

¶ Access Control

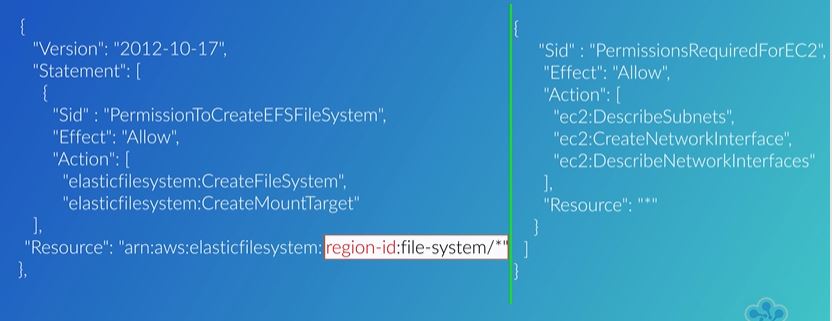

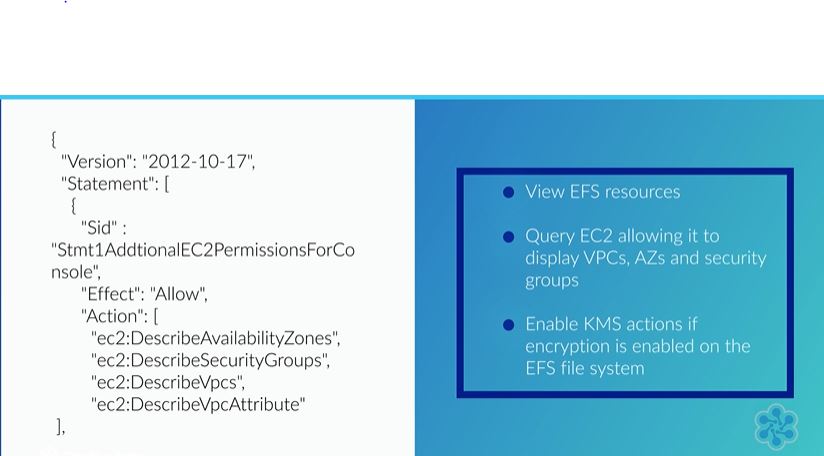

To create your EFS, you will need to allow the following permissions:

- elasticfilesystem:CreateFileSystem

- elasticfilesystem:CreateMountTarget

- ec2:DescribeSubnets

- ec2:CreateNetworkInterface

- ec2:DescribeNetworkInterfaces

¶ Example Policy
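A minimal example policy granting the permissions listed above, built as a Python dictionary and printed as JSON. The Sid is a hypothetical name, and Resource "*" is used only to keep the sketch short; in practice you would scope it to specific resources where the actions support it.

```python
import json

EFS_CREATE_ACTIONS = [
    "elasticfilesystem:CreateFileSystem",
    "elasticfilesystem:CreateMountTarget",
    "ec2:DescribeSubnets",
    "ec2:CreateNetworkInterface",
    "ec2:DescribeNetworkInterfaces",
]


def build_policy(resource: str = "*") -> dict:
    """Identity-based IAM policy allowing EFS creation and mount-target setup."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowEfsCreation",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": EFS_CREATE_ACTIONS,
                "Resource": resource,
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(build_policy(), indent=2))
```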

¶ Permissions

- The EFS mount helper uses stunnel to encrypt data in transit (enabled with the tls mount option).

¶ Moving data into EFS

¶ AWS DataSync

The recommended approach is to use another service, AWS DataSync. It is designed to help you securely move, migrate, and synchronize data from your existing on-premises site into AWS storage services such as Amazon EFS or Amazon S3. Data can be transferred over AWS Direct Connect or over the internet. To sync source files from your on-premises environment, you must deploy the DataSync agent, as a VMware ESXi virtual machine, at your site. The agent is configured with the source and destination targets, is associated with your AWS account, and logically sits between your on-premises file system and your EFS file system.

DataSync is also useful for transferring files between EFS file systems, either within the same AWS account or across accounts, including accounts owned by a third party. To help manage and implement such transfers, AWS has created an AWS DataSync in-cloud quick start and scheduler, available on GitHub.